Technology of the future: Could deepfakes replace VFX?

Films can be an escape from reality, taking you on unimaginable journeys, even putting you in the middle of an epic battle happening in space or underwater.

Filmmakers increasingly rely on visual effects (VFX) to take us there, as their fantastical visions become impossible - or astronomically expensive - to capture on camera.

While special effects have been around since the dawn of film more than 130 years ago, computer-generated imagery (CGI) first appeared in the 1970s, and it went on to revolutionise mainstream filmmaking in blockbusters such as The Abyss, Jurassic Park and Terminator 2, all considered breakthrough CGI movies.

But although the VFX industry seems unshakeable, it is incredibly costly, and a new AI technology may be able to reduce the price and could even eliminate the need for some of its artists.

What makes VFX difficult?

Allar Kaasik, senior VFX tutor at Pearson College London, told Yahoo Movies UK: “It requires a large set of artistic and technical skills, ranging from sculpting, painting and animation to algorithm design, maths, physics and biology.

“The artist takes into consideration the underlying bone and muscle structures, which are animated under the constraints of what real human faces are capable of.

“The model is then textured to give the skin a realistic colour and surface appearance, which includes complex algorithms calculating how light should reflect off the skin or scatter underneath the skin in the softer parts of tissue, such as the ears or the nose.

“It needs to be lit by lights in 3D space to match the lights in the real scene and after rendering the model into an image sequence (a video) a compositing artist has to combine it with the original footage of the real actress or stand-in.”

Thanks to advances in VFX, filmmakers can now make actors look younger, like Robert Downey Jr in Captain America: Civil War and Carrie Fisher as a young Princess Leia in Rogue One: A Star Wars Story, or even bring someone back to life, like the late Peter Cushing as Grand Moff Tarkin in the same film.

However, there is a new form of computer-generated imagery that could potentially change the industry - deepfakes.

What is a deepfake?

A portmanteau of "deep learning" (an AI technique built on algorithms called artificial neural networks, which are inspired by the structure and function of the brain) and "fake", deepfakes have spread rapidly across the internet in just a few years.

They first entered the public eye in late 2017, when an anonymous Redditor using the name “deepfakes” began uploading videos of celebrities like Scarlett Johansson stitched onto the bodies of pornographic actors, while non-pornographic examples included many videos with actor Nicolas Cage’s face swapped into various movies.

Recently, a deepfake of Mark Zuckerberg emerged on Instagram. It was created by artists Bill Posters and Daniel Howe and shows the Facebook founder sitting at a desk, seemingly giving a sinister speech about the company's power.

There are also fears that deepfakes could influence elections: a doctored video of a candidate saying ludicrous things could be taken as genuine and discredit them.


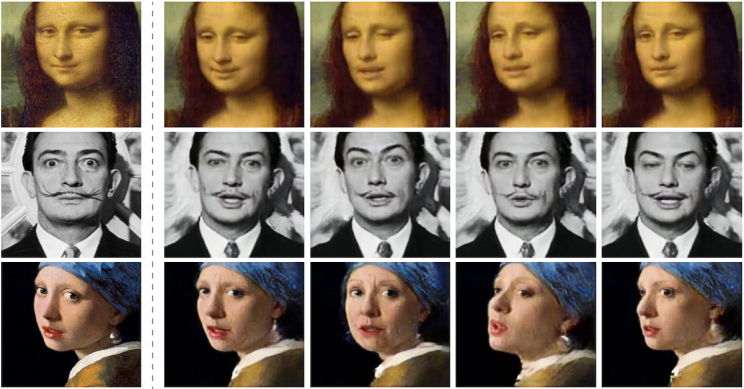

Deepfakes learn about faces differently from VFX. Instead of an artist building a face from scratch, a program is trained to learn that most people have two eyes, a nose and a mouth, and how these features vary from person to person.

Next, it is given a large set of images of one person's face from different angles (the program works better with celebrities because they are so well documented and photographed). In the final step, the algorithm is given a source video of another person, and the AI replaces that person's face with one generated from what it has learned the celebrity looks like.

And it does so remarkably well, matching the actions, head pose, facial expressions and lighting of the scene.
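To make the steps above more concrete, here is a toy Python sketch of the data flow in one widely used open-source face-swap design, in which a single shared encoder learns what all faces have in common and a separate decoder is trained for each person. It is an illustration under loud assumptions, not how any particular tool works: the `Frame` and `Latent` types and every function name are hypothetical, and the neural networks are replaced by stand-ins that simply copy fields. It shows what is kept from the source video and what is swapped, not the learning itself.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Frame:
    """Toy stand-in for one video frame containing a face (hypothetical)."""
    identity: str      # whose face it is
    head_pose: float   # e.g. head turn in degrees
    expression: str    # e.g. "smile"
    lighting: float    # e.g. relative brightness, 0.0 to 1.0


@dataclass(frozen=True)
class Latent:
    """What the shared encoder keeps: everything about the shot except identity."""
    head_pose: float
    expression: str
    lighting: float


def shared_encoder(frame: Frame) -> Latent:
    # Hypothetical stand-in: a real encoder is a neural network trained on
    # images of both people; here we simply discard the identity field.
    return Latent(frame.head_pose, frame.expression, frame.lighting)


def make_decoder(identity: str) -> Callable[[Latent], Frame]:
    # Hypothetical stand-in for a decoder trained only on one person's photos:
    # it redraws the encoded pose, expression and lighting with that face.
    def decode(latent: Latent) -> Frame:
        return Frame(identity, latent.head_pose, latent.expression, latent.lighting)
    return decode


def swap_faces(source_video: List[Frame], target_identity: str) -> List[Frame]:
    """Encode each source frame, then decode it with the target person's decoder."""
    decode = make_decoder(target_identity)
    return [decode(shared_encoder(frame)) for frame in source_video]


source = [
    Frame("stand-in", head_pose=-10.0, expression="neutral", lighting=0.6),
    Frame("stand-in", head_pose=25.0, expression="smile", lighting=0.8),
]
swapped = swap_faces(source, "celebrity")
for before, after in zip(source, swapped):
    print(before.identity, "->", after.identity, after.head_pose, after.expression)
```

The point of the split is that everything the swap must preserve (pose, expression, lighting) passes through the shared encoder, while the identity comes only from the chosen decoder. In a real network, that middle representation is an opaque set of learned numbers rather than named fields, which is one reason artists have so few dials to turn.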

Allar said: “Every technology always brings with it some disruption. The main issue with deepfake technology and a lot of AI approaches is that they work a little bit like "black boxes".

“You give them an input and they give you a result, but there is very little that you can do to control what result you are going to get. So it doesn't come with options for tweaking or changes.

“However, if this technology is developed further, then some of those extra parameters may be added at some point and someone might develop software packages that professionals could use as a small part of their pipeline.”

Although the precise cost of post-production is not known, VFX for a blockbuster is thought to run to hundreds of thousands or even millions of pounds, while a decent deepfake can be created on a £500 computer.

“Depending on the scale of the production, a team of artists can sometimes work on one shot for the whole post-production process, which can take between one and two years,” said Allar. “So there can be teams of people working full-time for months on end, earning specialist salaries and working with high-end professional technology and software.

“There are software packages to generate deepfakes that are available for free and can be used to generate a reasonably good-looking video within a day on a good gaming computer.

“To properly implement a deepfake algorithm from scratch, a thorough understanding of machine learning algorithms and linear algebra is needed, along with good computing power to train the AIs on, but an enthusiast can get started with a simple internet search and be up and running in a couple of hours by using the existing software solutions that are out there.”

So will deepfakes eliminate the need for VFX production studios?

“Deepfakes are not going to replace the need for VFX production studios if the output needs to be high-end film or commercials, because a VFX production studio can give you a better guarantee of delivering great results,” commented Allar.

“Where deepfakes might get used in VFX is for quicker face replacements, such as getting the main actress's face onto the stunt double when they're quite small in the shot.

“Likewise, it could make it easier to do crowd duplication shots, when you only have a limited number of extras, but you need to vary the faces.

“For any close-up work, the deepfake technology is missing the parameters that the artist could dial to try to improve the result or change it according to what the director wants.”

Yahoo Movies